January 8, 2026

Understanding the Google Core Update of December 2025: What It Means, Who It Affects, and How to Respond

January 8, 2026

Generative Engine Optimization (GEO): The Complete Guide to Ranking in AI Search

January 8, 2026

Author:

.png)

Search is no longer text-only. In 2025, Google, SearchGPT, Perplexity, and Bing Copilot analyze text, images, videos, diagrams, UI screenshots, and visual layouts to understand intent and determine the best possible answer.

Multimodal AI models like GPT-4o, Gemini, and Claude 3.5 can interpret visuals with almost the same accuracy as written content. They recognize objects, read text inside images, understand diagrams, compare screenshots, and evaluate whether a visual element provides real value to the user.

This shift fundamentally changes SEO.

Visuals are no longer “decorations” or supportive assets — they actively influence rankings, citations in AI answers, and inclusion in AI Overviews. Pages with clear, original, contextual visual elements are consistently favored by both Google and AI search engines.

“In 2025, visuals are not supporting content — they are ranking signals.”

Multimodal search means your page is evaluated holistically: factual clarity, visual clarity, design clarity — all at once.

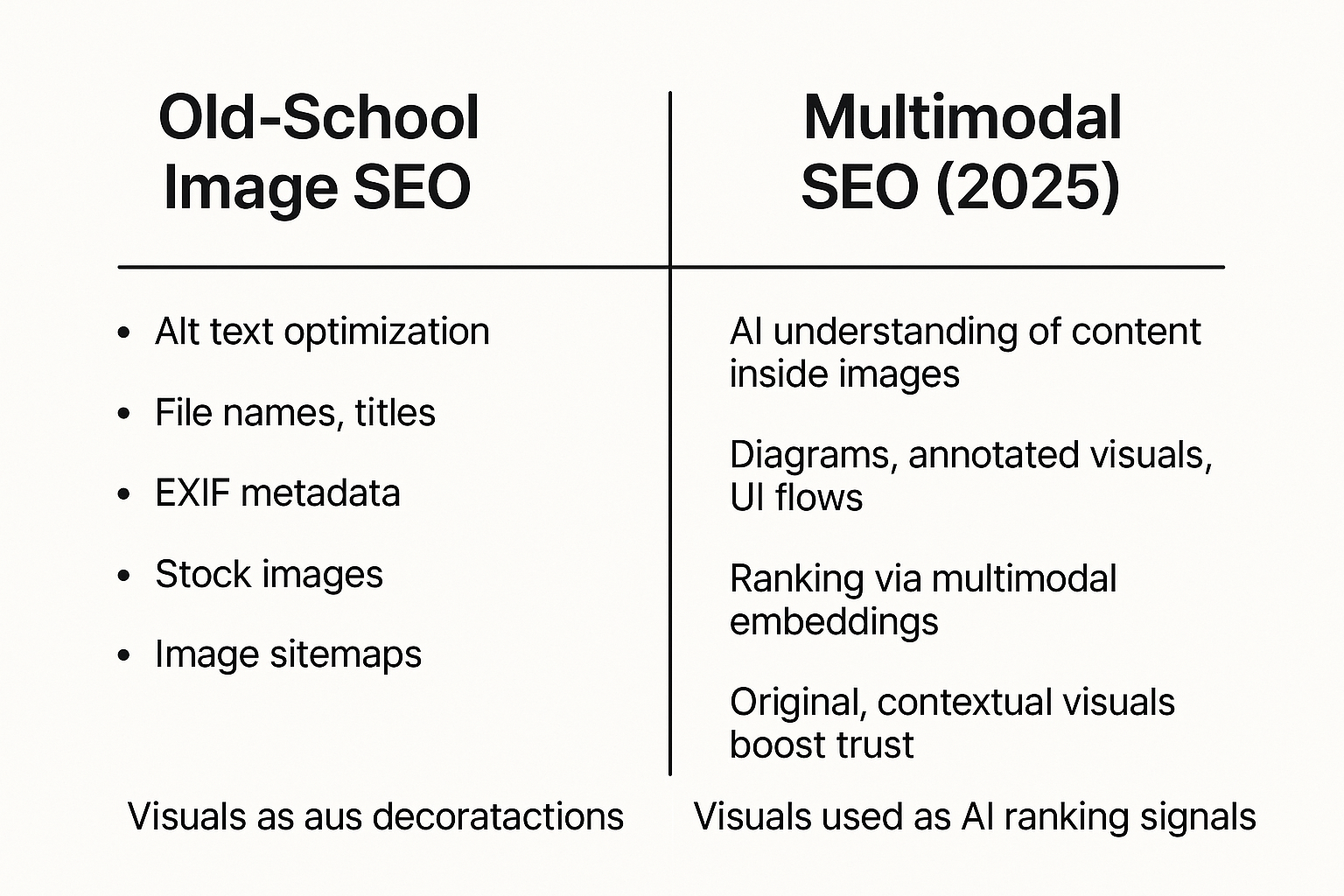

Multimodal SEO is the practice of optimizing content for AI systems that understand both text and visual information together.

These models don’t treat images as separate assets — they integrate them into their understanding of the page’s meaning, relevance, and expertise.

Modern AI can:

AI forms a unified representation — a multimodal embedding — that includes text + visuals + layout.

Google increasingly considers visuals part of the search intent, because users often expect:

If your page includes the exact visual format that matches the intent, Google is more likely to surface it in:

The new era of SEO is multimodal — and visual assets now matter as much as written content.

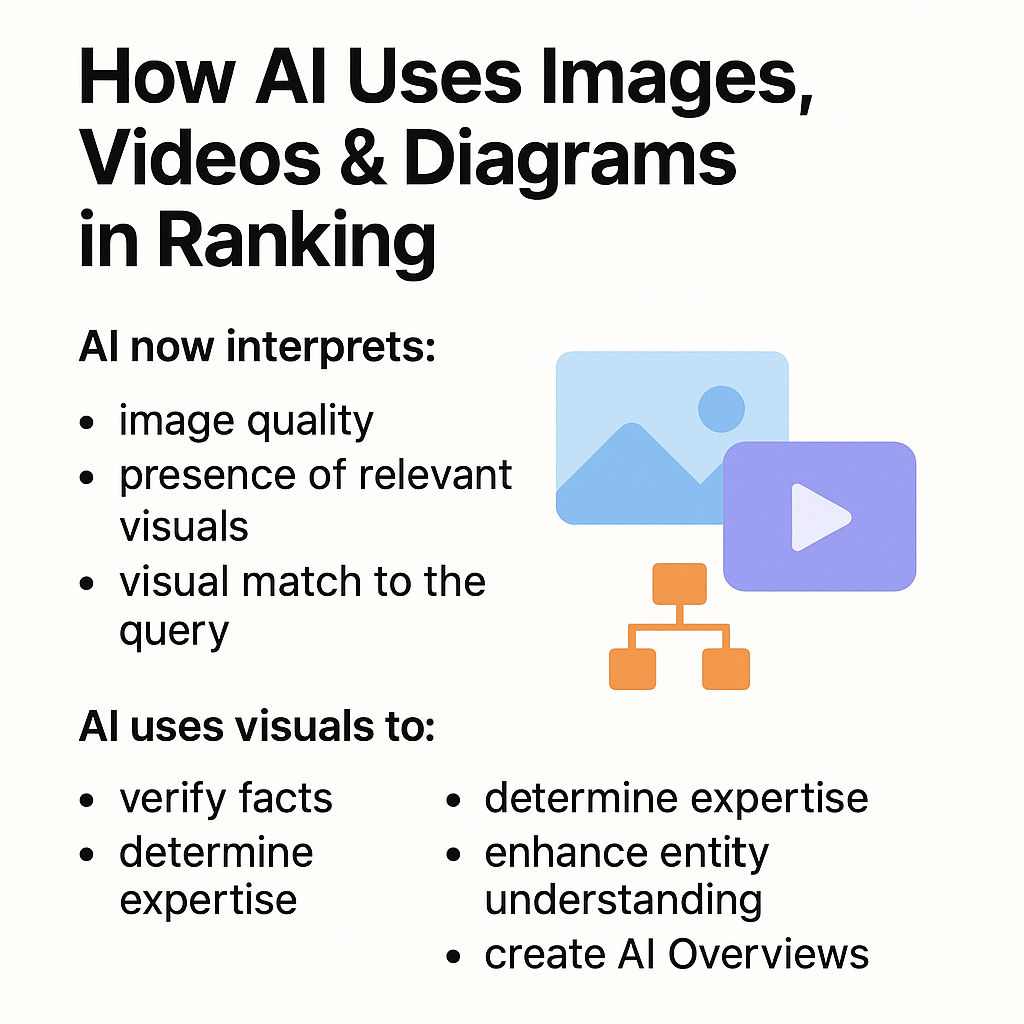

AI search engines are no longer blind to visual content — they actively interpret, classify, and evaluate it as part of the ranking process. Modern multimodal models “see” visuals with near-human accuracy and use them to validate meaning, expertise, and intent coverage.

• Image quality

AI prioritizes visuals that are clear, original, and contextually relevant. Blurry, generic, or low-effort images reduce trust signals.

• Contextual relevance

AI checks whether the visual actually supports the surrounding text.

If the image matches the query intent (e.g., a product photo in a product review), ranking probability increases.

• Visual–query alignment

For many searches (e.g., “how to fix…”, “best laptop 2025”), AI expects specific visual formats. Matching the visual expectation improves ranking.

• Diagrams and annotated graphics

AI models extract meaning from diagrams — process flows, comparison charts, feature maps.

These visuals help the model understand the topic, not just “decorate” the page.

• Video content

AI can parse videos, identify steps, objects, and voices, and integrate them into intent coverage.

A relevant video increases your chance of winning both SERP and AI Overview positions.

AI is not simply looking at images — it is using them to make decisions.

1. Fact Verification

Visuals help AI confirm that the text is accurate.

Example: A diagram explaining a product feature validates the written description.

2. Determining Expertise

Original photos, UI flows, or product demos signal hands-on experience — a strong differentiator for EEAT and AIAT.

3. Enhancing Entity Understanding

Images associated with a brand contribute to the model’s internal entity embedding.

Consistency across visuals = higher trust.

4. Feeding AI Overviews (AIO)

AI Overviews prefer pages with multimodal clarity.

If your page includes strong visuals, it becomes a more likely source for inclusion.

5. Generating Direct Recommendations

When AI makes product recommendations (“best”, “top”, “for beginners”), visuals help models determine:

This is why multimodal content performs dramatically better across all AI-driven search engines.

Visuals influence ranking because AI treats them as multimodal trust signals.

They represent clarity, accuracy, and experience — exactly what modern AI values.

• Trust

Original photos, screenshots, and unique diagrams prove that your content is real and not autogenerated.

• Authority

Structured visuals (infographics, charts, step-by-step diagrams) signal expertise — something AI uses to rank authoritative sources.

• Clarity

Visuals simplify complex topics, allowing AI to extract meaning more easily.

Clear information is a ranking advantage.

• Experience (EEAT)

Real photos, real product shots, or actual UI usage strengthen “Experience” — one of the most weighted signals in modern AI evaluation.

• Completeness

When visuals cover parts of the user’s intent (e.g., showing how something works), the page becomes semantically complete.

AI ranks complete answers higher.

This is what AI systems call multimodal grounding — aligning text + visuals + structure to build a coherent, trustworthy representation of information.

Modern AI models recognize the difference between generic visuals and meaningful, original content. The following types of visuals significantly strengthen your SEO performance in the multimodal era:

These formats help AI confirm expertise, understand intent, and identify the page as a high-value resource.

1. Create visuals with intent

Every visual must answer a specific user question or support a search intent. AI models score “purposeful visuals” higher.

2. Pair visuals with meaningful text

AI ranks pages better when images and videos are supported by:

This improves semantic alignment.

3. Implement multimedia structured data

To help AI interpret visuals correctly, add:

Structured data acts as the “glue” between visuals and meaning.

4. Use original media to boost trust

AI penalizes generic, overused images. Authentic visuals increase trust and entity credibility.

5. Place visuals strategically

Put key visuals:

AI models use placement as a relevance signal.

AI does not “see images” the way humans do. It processes visuals using:

In simple terms:

“AI doesn’t see pixels — it sees meaning.”

This is why visuals have become full-fledged ranking signals in the multimodal SEO era.

Google, Bing, and GPT-based engines now integrate visuals directly into AI Overviews. These visuals influence whether your page is selected as a source:

AI Overviews use visuals to enhance:

Pages containing clear, original, context-aligned visuals have a significantly higher chance of being included in AIO sources.

If your page lacks visuals, AI Overviews often choose competitors.

Multimodal SEO already works in practice — across multiple industries:

Any page that “shows” instead of only “telling” performs better in AI-driven search.

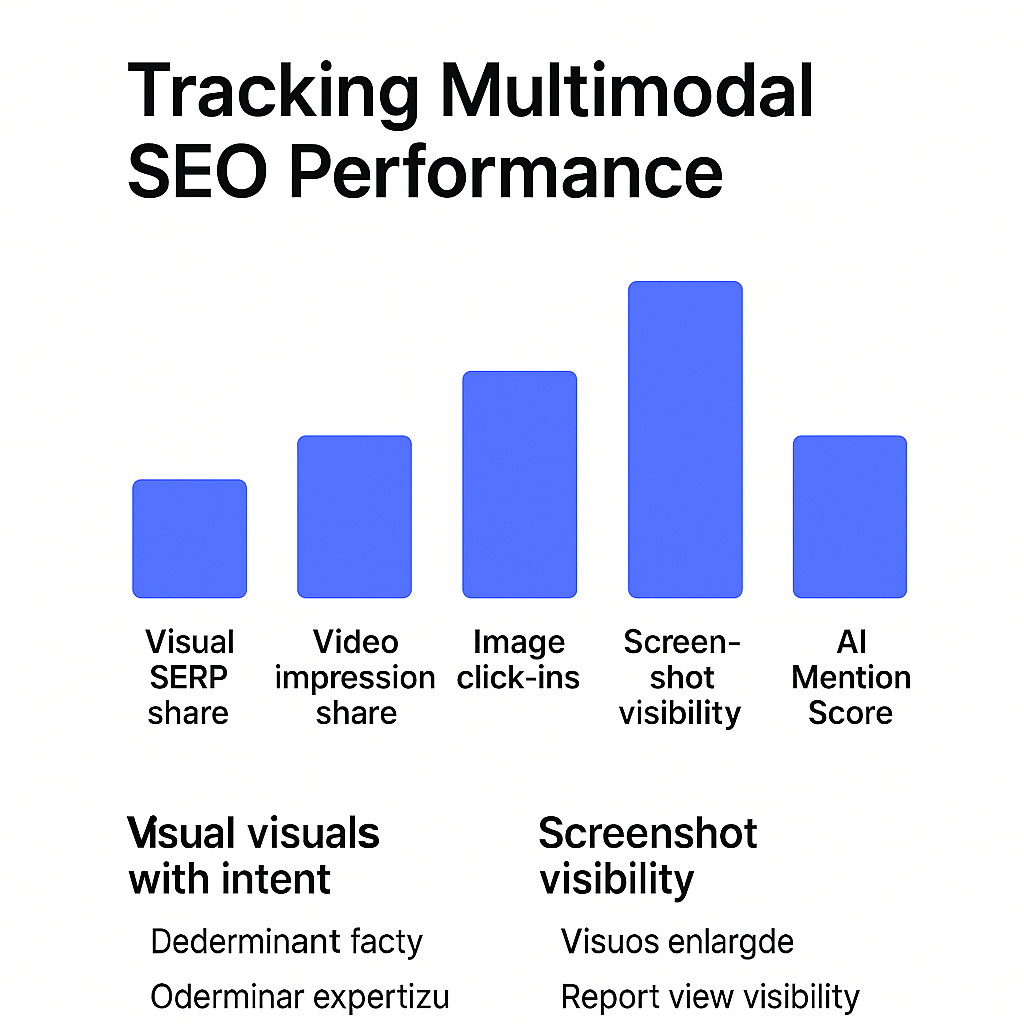

Traditional SEO metrics only measure text-based ranking.

Multimodal SEO requires a new measurement stack:

These metrics reveal how well your content performs in a multimodal search environment — not just a text-based one.

Multimodal SEO is only at the beginning. Over the next few years, the shift will accelerate as search engines and AI models rely more on visual semantics than plain text.

Here’s what the future looks like:

Search engines will increasingly weight:

AI models prefer content that provides meaning in multiple formats — not just paragraphs.

AI Overviews, Bing Deep Answers, and SearchGPT will blend:

If your page lacks visuals, you risk not being included at all.

AI will validate:

Fake, generic, or low-effort visuals will get filtered out.

Brands that consistently use:

…will be ranked as more credible and more authoritative.

Many answers will require no clicks:

Your visuals must “carry” the answer inside AI output.

A new discipline will emerge:

VXO will sit next to SEO, UX, and CRO as a core practice.

Your new AI assistant will handle monitoring, audits, and reports. Free up your team for strategy, not for manually digging through GA4 and GSC. Let us show you how to give your specialists 10+ hours back every week.

Read More

January 8, 2026

10 min

January 8, 2026

10 min

January 8, 2026

10 min

Just write your commands, and AI agents will do the work for you.